We are officially announcing the launch of a new scale for rating scientific publications, which scientists can use to contribute to the assessment of the publications they read. The rating scale was presented at the 15th International Conference on Scientometrics and Informetrics, recently held in Istanbul, Turkey (Florian, 2015). Scientists can now use this scale to rate publications on the Epistemio website.

The use of metrics in research assessment is widely debated. Metrics are often seen as antagonistic to peer review, which remains the primary basis for evaluating research. Nevertheless, metrics can actually be based on peer review, by aggregating ratings provided by peers. This requires an appropriate rating scale.

Online ratings typically take the form of a five-star or ten-star discrete scale: this standard has been adopted by major players such as Amazon, Yelp, TripAdvisor and IMDb. However, scales of this type do not measure the quality and importance of scientific publications well, because the actual distribution of this target variable is likely to be highly skewed. Extrapolating from the distributions of bibliometric indicators, the maximum values of the target variable are likely to be 3 to 5 orders of magnitude larger than the median value.

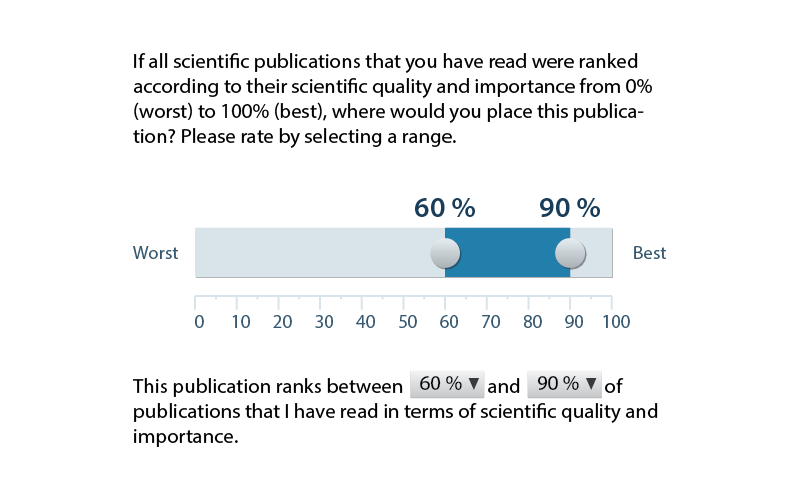

A solution to this conundrum is asking reviewers to assess not the absolute value of quality and importance, but the relative value, on a percentile ranking scale. On such a scale, the best paper is not represented by a number several orders of magnitude larger than the number representing the median paper, but just 2 times larger (100% for the best paper vs. 50% for the median paper).
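As a rough illustration of this compression, the sketch below converts a small, heavily skewed set of scores to percentile rankings. The scores are made up for the example, and `percentile_rank` is a hypothetical helper, not part of any Epistemio interface.

```python
from bisect import bisect_right


def percentile_rank(value, population):
    """Percentage of items in `population` whose score is <= `value`."""
    ordered = sorted(population)
    return 100.0 * bisect_right(ordered, value) / len(ordered)


# Hypothetical absolute scores with a highly skewed distribution.
scores = [1, 1, 2, 3, 5, 8, 20, 60, 400, 100000]

best = max(scores)
median_item = sorted(scores)[len(scores) // 2 - 1]  # lower median of the sample

# On the absolute scale the best item dwarfs the median item...
ratio_absolute = best / median_item
# ...while on the percentile scale it is only twice as large (100 vs. 50).
ratio_percentile = percentile_rank(best, scores) / percentile_rank(median_item, scores)

print(ratio_absolute, ratio_percentile)
```

In this toy sample the absolute ratio spans more than four orders of magnitude, while the percentile ratio is exactly 2.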

It is typically possible to estimate the percentile ranking of high-quality papers with better precision than that of lower-quality papers (e.g., it is easier to discriminate between top 1% papers and top 2% papers than between top 21% papers and top 22% papers). Therefore, the precision in assessing the percentile ranking of a publication varies across the scale. Reviewers may also have various levels of familiarity with the field of the assessed publication. Thus, it is useful for them to be able to express their uncertainty. The solution adopted for the new scale was to allow reviewers to provide the rating as an interval of percentile rankings, rather than a single value. Scientists can additionally publish reviews on Epistemio that support their ratings.
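An interval rating of this kind can be represented quite simply. The sketch below is only an illustration: the class name `IntervalRating`, its fields, and the `summarize` function are assumptions made for this example, and averaging midpoints and widths is one naive way to combine ratings, not necessarily the aggregation that Epistemio uses.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class IntervalRating:
    """One reviewer's rating: an interval of percentile rankings (0 to 100)."""
    low: float
    high: float

    def __post_init__(self):
        # Reject intervals that are reversed or fall outside the scale.
        if not 0.0 <= self.low <= self.high <= 100.0:
            raise ValueError("expected 0 <= low <= high <= 100")

    @property
    def midpoint(self) -> float:
        return (self.low + self.high) / 2

    @property
    def width(self) -> float:
        # A wider interval expresses more uncertainty from the reviewer.
        return self.high - self.low


def summarize(ratings):
    """Illustrative aggregate only: mean midpoint and mean interval width."""
    return mean(r.midpoint for r in ratings), mean(r.width for r in ratings)


# Hypothetical ratings: a confident reviewer, an uncertain one, and one in between.
ratings = [IntervalRating(98, 99), IntervalRating(90, 100), IntervalRating(94, 98)]
mean_midpoint, mean_width = summarize(ratings)
print(mean_midpoint, mean_width)
```

A narrow interval such as 98 to 99 signals a confident reviewer, while 90 to 100 signals a less certain one. A more careful aggregate might give narrower intervals more weight.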

The aggregated ratings could provide evaluative information regarding scientific publications that is much better than what is available through current methods. Importantly, if scientists voluntarily rate the publications they read for the purpose of their own research, publishing such ratings requires only a minor effort, of about 2 minutes per rating. On average, each scientist thoroughly reads about 88 scientific articles per year, and the evaluative information that scientists can provide about these articles is currently lost. If each scientist provided one rating per week, it can be estimated that 52% of publications would get 10 ratings or more (Florian, 2012). This would significantly enhance the evaluative information needed by users of scientific publications and by decision makers who allocate resources to scientists and research organizations.
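The effort involved can be checked with a little arithmetic on the figures quoted above. This sketch assumes a 52-week year, and the variable names are made up for the example. It does not reproduce the model behind the 52% estimate, which is described in Florian (2012).

```python
MINUTES_PER_RATING = 2        # approximate effort per rating, as quoted above
ARTICLES_READ_PER_YEAR = 88   # articles read thoroughly per scientist per year
RATINGS_PER_YEAR = 52         # one rating per week, assuming 52 weeks in a year

# Total time one scientist would spend on rating over a year.
yearly_minutes = RATINGS_PER_YEAR * MINUTES_PER_RATING

# Share of the thoroughly read articles that would receive a rating.
share_rated = RATINGS_PER_YEAR / ARTICLES_READ_PER_YEAR

print(f"{yearly_minutes} minutes per year, {share_rated:.0%} of articles read")
```

Under these assumptions, a scientist would spend under two hours per year on rating and would rate somewhat more than half of the articles they read thoroughly.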

Indicators that aggregate peer-provided ratings solve some of the most important problems of bibliometric indicators:

- citation-based indicators need to be normalized across fields because of differences in common practices (e.g., the median impact factor or the median number of citations is larger in biology than in mathematics), but widely available bibliometric indicators are not normalized by their providers;

- in some fields, scientific journals are not the only relevant channel for publishing results, but the coverage of other types of publications (books, conference papers) in commercially available databases is poorer; this may be unfair to these fields, or may require arbitrary comparisons between different types of indicators.

Indicators that aggregate peer-provided ratings make it possible to compare, without bias, publications from any field and of any type (journal papers, irrespective of whether they are present in the major databases or not; conference papers; books; chapters; preprints; software; data), regardless of the publication’s age and of whether the publication received citations or not.

How scientists can provide ratings

To start rating the publications that you have read:

- Search for the publication on Epistemio;

- Click on the publication title to go to the publication’s page on Epistemio, and add your rating. Optionally, you can publish a review to support the rating;

- If you are not logged in, please log in or sign up to save your rating and review.

Ratings and reviews may be signed or anonymous.

How research managers can use ratings in institutional research assessments

If the publications you would like to assess have not received enough ratings from scientists who read them and volunteered to publish their ratings on Epistemio, our Research Assessment Exercise service can select qualified reviewers and provide a sufficient number of ratings for these publications.

References

Florian, R. V. (2012). Aggregating post-publication peer reviews and ratings. Frontiers in Computational Neuroscience, 6, 31.

Florian, R. V. (2015). A new scale for rating scientific publications. In Proceedings of ISSI 2015: 15th International Society of Scientometrics and Informetrics Conference (pp. 419-420). Istanbul, Turkey: Boğaziçi University.